1. Given a sorted (in increasing order) array A[1..n] of distinct integers, (a) use the divide-and-conquer technique to design an algorithm that in o(n) (i.e. sublinear) time, determines whether there exists an i, 1 ≤ i ≤ n, such that A[i] = i; For example, for array [2, 3, 9, 11, 19], the answer is no while the answer is yes for [−10, −5, 1, 4, 10, 20, 30, 50] since A[4] = 4. (2) let t(n) be the running time of your algorithm, give a recurrence for t(n) and solve it using all four different methods covered. Solutions to incorrect recurrence will only get partial credit. You can use this and the next page for Q1. Describe your algorithm in English or very high level pseudo code.

2. Consider the chained matrix multiplication problem covered in class where we are supposed to find an optimal order by which to calculate M = M1 × M2 × ··· × Mn. For the following suggested greedy idea, provide a counter example where the greedy solution does not work: First multiply the matrices Mi and Mi+1 whose common dimension ri is smallest, and continue in the same way (note that Mi is a ri−1 by ri matrix).

3. In the following, NPC is the set of all NP-complete problems, A, B, and C problems, and Φ the empty set. Write True or False for each of the statements. No explanations are required.

(a) If P ∩ NPC ≠ Φ, then P = NP

(b) P ⊆ NP

(c) If A ≤p B and B ≤p C, then A ≤p C

(d) NP stands for non-polynomial

(e) An NP-complete problem is the hardest problem in NP

(f) If one NP-complete problem is solvable in polynomial time deterministically, then so are all NP-complete problems

(g) All NP-complete problems are polynomially reducible to each other

(h) Dynamic programming is applicable to any problem

(i) Greedy algorithms never find optimal solutions

(j) Greedy algorithms always find optimal solutions

4.

(a) The 0-1 knapsack problem (decision), covered in class, is given as follows: given n objects each with a value vi and weight wi, knapsack capacity C, and value k, is there a solution with total value ≥ k. The subset sum problem, also covered in class, is defined as follows:

Given a set of integers S = {x1, x2, ··· , xn} and an integer b, does there exist a subset S′ ⊆ S such that Σxi∈S′xi = b. This problem is known to be NP-complete (shown in class). Show that the 0-1 knapsack problem (decision version) is also NP-complete.

(b) Even though the subset sum problem is NP-complete in general, some special cases are solvable in polynimial time. Consider the following case where the input S = {x1, x2, ··· , xn} is superincreasing, i.e. each number exceeds the sum of the preceeding numbers: xj > Σj−1 for j = 2, 3, ···, n. Give a simple linear algorithm that solves this version of the subset sum problem. Describe your algorithm in English or very high level pseudo code. Also justify its time.

5.

(a) Compare dynamic programming with divide-and-conquer: What is the principal difference between the two techniques?

(b) Why is the Principle of Optimality important for dynamic programming method?

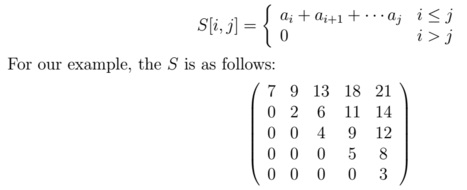

(c). Adding up n integers. Given a sequence of integers a1, a2, ..., an, and we want to compute a1 + a2 + ... + an. Of course, this is done by n − 1 additions. Suppose that each time, we have to add two neighboring elements, and the cost of adding two numbers a and b is the value a + b. In addition, we cannot rearrange the order of the numbers in the input. For example, for input 7, 2, 4, 5, 3, one way of computing the sum is by the following series of operations: 7 + (2 + 4) + 5 + 3 → 7 + 6 + (5 + 3) → (7 + 6) + 8 → (13 + 8) → 21 with a cost of 6+8+13+21 = 48. On the other hand, the following series of operations (7 + 2) + 4 + 5 + 3 → (9 + 4) + 5 + 3 → (13 + 5) + 3 → (18 + 3) → 21 has a cost of 9+13+18+21 = 61. The goal is to find cheapest way to add up these n numbers using the dynamic programming method. We assume that matrix S is known where S[i, j] is defined as follows (note that even though S[1, n] = Σn the problem by dynamic programming): i=1ai, the purpose of this question is to solve:

Give a dynamic programming algorithm for computing the cheapest cost of adding n positive integers: Define a cost function, verify that the principle of optimality holds, and give a recurrence on next page.